Unraveling Seventeen Billion Four Hundred Seventy-One Million Numbers


Have you ever wondered what seventeen billion four hundred seventy-one million of anything actually looks like? Whether you’re a data scientist, a mathematician, or simply curious, quantities this large are both fascinating and hard to reason about. This post walks through the scale of the number, where numbers like it show up, and how to work with datasets of that size effectively.

Understanding the Scale: Seventeen Billion Four Hundred Seventy-One Million

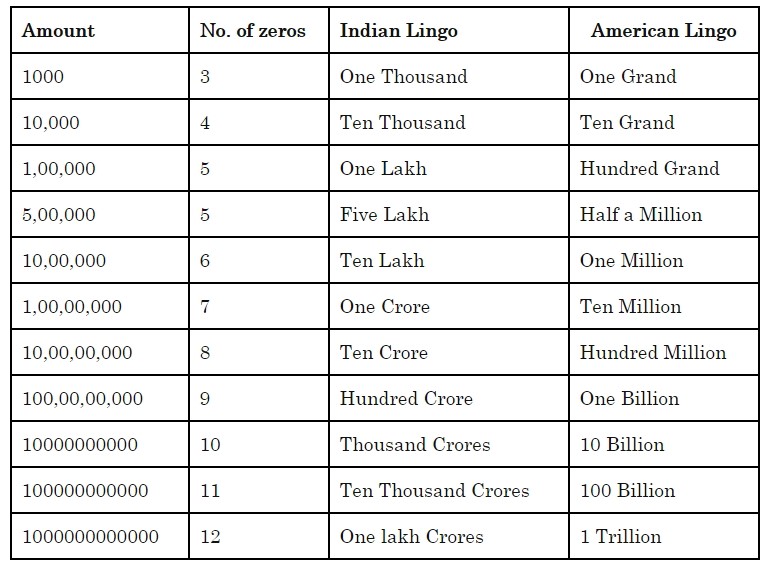

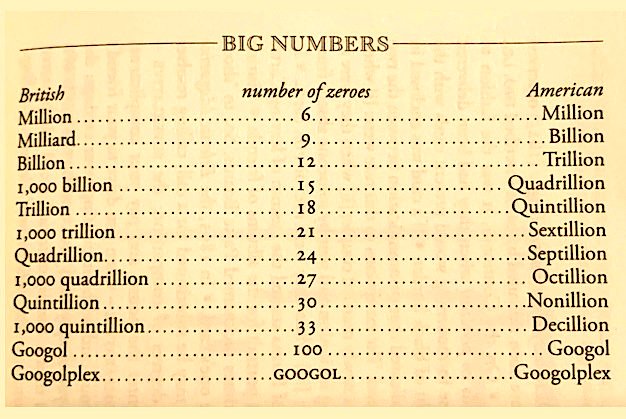

The number seventeen billion four hundred seventy-one million (17,471,000,000) is more than just a string of digits. It represents a scale that’s hard to comprehend without context. To put it into perspective, consider that this number:

- Is more than twice the world’s population of roughly 8 billion people.

- Could represent the total number of transactions in a global financial system over some period.

- Could represent the number of records (events, log lines, messages) that large tech companies generate.

Understanding this scale is crucial for anyone dealing with big data, statistics, or large-scale computations.
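One practical consequence of this scale is that the number no longer fits in a 32-bit integer, which matters when choosing column types or array dtypes. A minimal sketch of that check follows; the variable names are illustrative only.

```python
# The count discussed in this post.
N = 17_471_000_000

# Largest values that fit in common fixed-width integer types.
INT32_MAX = 2**31 - 1      # signed 32-bit
UINT32_MAX = 2**32 - 1     # unsigned 32-bit
INT64_MAX = 2**63 - 1      # signed 64-bit

bits_needed = N.bit_length()       # minimum bits for the unsigned value
fits_in_uint32 = N <= UINT32_MAX
fits_in_int64 = N <= INT64_MAX

print(f"{N:,} needs {bits_needed} bits")
print("fits in unsigned 32-bit:", fits_in_uint32)
print("fits in signed 64-bit:", fits_in_int64)
```

The value needs 35 bits, so counters, row IDs, and offsets at this scale should use 64-bit types.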

Applications of Large Numbers in Real-World Scenarios

Data Analysis and Machine Learning

In data analysis and machine learning, datasets can reach into the billions of entries. For instance, training a machine learning model might require processing seventeen billion data points. Data at that volume rarely fits in memory at once, so it is typically processed in batches or as a stream. Tools like Python, R, and specialized databases are essential for handling such volumes efficiently.
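As a rough illustration of processing data points without holding them all in memory, the sketch below computes a mean and variance in a single pass using Welford's online algorithm. The function name `running_stats` is made up for this example, and the ten-element range stands in for a far larger stream.

```python
def running_stats(values):
    """Return (count, mean, variance) for an iterable in a single pass.

    Uses Welford's online algorithm, so only three numbers are kept in
    memory regardless of how many values are consumed.
    """
    count = 0
    mean = 0.0
    m2 = 0.0  # running sum of squared deviations from the mean
    for x in values:
        count += 1
        delta = x - mean
        mean += delta / count
        m2 += delta * (x - mean)
    variance = m2 / count if count else 0.0  # population variance
    return count, mean, variance


# Small stand-in for a much larger stream of data points.
count, mean, variance = running_stats(range(1, 11))
print(count, mean, variance)
```

Because the function accepts any iterable, the same code could consume a file reader or database cursor instead of a range.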

Financial Systems and Transactions

Financial institutions process millions of transactions daily, and cumulative totals in global systems can reach figures like seventeen billion four hundred seventy-one million. Ensuring accuracy and security in these transactions is paramount.
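One common source of inaccuracy in monetary totals is binary floating point. The sketch below, which uses made-up amounts, compares summing ten payments of 0.10 as floats and as `decimal.Decimal` values from Python's standard library. It is an illustration of the accuracy concern, not a description of how any particular financial system works.

```python
from decimal import Decimal

# Ten payments of 0.10 each, once as floats and once as decimals.
float_total = sum([0.10] * 10)
decimal_total = sum([Decimal("0.10")] * 10, Decimal("0"))

print("float total == 1.0:   ", float_total == 1.0)
print("decimal total == 1.00:", decimal_total == Decimal("1.00"))
```

Small per-value errors like this can accumulate across billions of additions, which is one reason exact decimal or integer (minor-unit) representations are often preferred for money.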

How to Work with Large Numbers Efficiently

Working with such large numbers requires the right tools and techniques. Here are some key strategies:

- Use Specialized Software: Tools like Python’s pandas (reading data in chunks rather than all at once) or SQL databases help with datasets that are too large to load into memory.

- Optimize Algorithms: Efficient algorithms reduce processing time and resource usage.

- Data Compression: Tools like gzip or bzip2 reduce storage and I/O requirements, at the cost of some CPU time for compression and decompression.

💡 Note: Always test your tools and algorithms on smaller datasets before scaling up to billions of entries.
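The compression and small-sample advice above can be sketched with Python's standard `gzip` module. The helper names `write_numbers` and `stream_sum` and the file name are hypothetical; the sample uses 100,000 values rather than billions, in the spirit of testing small first.

```python
import gzip
import os
import tempfile


def write_numbers(path, numbers):
    """Write one integer per line to a gzip-compressed text file."""
    with gzip.open(path, "wt", encoding="ascii") as f:
        for n in numbers:
            f.write(f"{n}\n")


def stream_sum(path):
    """Sum the integers in the file, reading one line at a time."""
    total = 0
    with gzip.open(path, "rt", encoding="ascii") as f:
        for line in f:
            total += int(line)
    return total


# Small sample, in the spirit of testing before scaling up.
sample_size = 100_000
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "numbers.txt.gz")  # hypothetical file name
    write_numbers(path, range(sample_size))
    compressed_bytes = os.path.getsize(path)
    total = stream_sum(path)

# Size the same data would take as uncompressed text (digits + newline).
raw_bytes = sum(len(str(n)) + 1 for n in range(sample_size))
print(f"raw: {raw_bytes:,} bytes, compressed: {compressed_bytes:,} bytes")
print("sum:", total)
```

Reading line by line keeps memory use flat regardless of file size, so the same pattern can be pointed at a much larger file once the small run checks out.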

Challenges and Solutions in Handling Large Datasets

Handling seventeen billion four hundred seventy-one million numbers isn’t without challenges. Common issues include:

- Memory Limitations: Large datasets can exceed system memory, requiring distributed computing solutions.

- Processing Speed: Analyzing billions of entries can be time-consuming without optimization.

- Data Integrity: Ensuring accuracy across such vast datasets is critical.

Solutions include using cloud computing, parallel processing, and robust data validation techniques.
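To make the memory limitation concrete, here is a rough sizing sketch. It assumes 8 bytes per value and a hypothetical 4 GiB budget per worker; neither figure comes from a particular system.

```python
N = 17_471_000_000          # number of values
BYTES_PER_VALUE = 8         # assumption: each value stored as a 64-bit number

total_bytes = N * BYTES_PER_VALUE
total_gib = total_bytes / 2**30

# Assumption for illustration: each worker can hold 4 GiB of values.
WORKER_BUDGET_BYTES = 4 * 2**30
values_per_chunk = WORKER_BUDGET_BYTES // BYTES_PER_VALUE
num_chunks = -(-N // values_per_chunk)   # ceiling division

print(f"raw size: {total_bytes:,} bytes (~{total_gib:.1f} GiB)")
print(f"chunks of {values_per_chunk:,} values: {num_chunks}")
```

Under these assumptions the raw values alone take about 140 GB (roughly 130 GiB), more than most single machines hold in memory, and the work splits into 33 chunks that could be handled in parallel or one after another.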

Checklist for Managing Large Numbers

Here’s a quick checklist to help you manage large numbers effectively:

- Choose the right tools for your task (e.g., Python, SQL, Hadoop).

- Optimize your algorithms for efficiency.

- Implement data compression techniques.

- Use distributed computing for large datasets.

- Regularly validate data integrity.
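For the last checklist item, one simple validation technique is to compare checksums of a chunk before and after it is copied or transferred. The sketch below uses SHA-256 from Python's standard `hashlib`; the helper `chunk_digest` and the 8-byte encoding are choices made for this example.

```python
import hashlib


def chunk_digest(values):
    """Return a SHA-256 hex digest over a sequence of integers."""
    h = hashlib.sha256()
    for v in values:
        # Fixed-width 8-byte encoding so the digest is unambiguous.
        h.update(v.to_bytes(8, "big", signed=True))
    return h.hexdigest()


original = list(range(1_000))
copied = list(original)
corrupted = list(original)
corrupted[500] += 1  # simulate a single altered value

digest_before = chunk_digest(original)
print("copy intact:     ", chunk_digest(copied) == digest_before)
print("corruption found:", chunk_digest(corrupted) != digest_before)
```

Storing one digest per chunk makes it possible to locate a damaged chunk without re-reading the entire dataset.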

In summary, seventeen billion four hundred seventy-one million numbers represent a scale that’s both daunting and exciting. Whether you’re analyzing data, processing transactions, or building models, understanding how to work with such large quantities is essential. By leveraging the right tools and techniques, you can turn these numbers into valuable insights.

What tools are best for handling large datasets?

Tools like Python (with pandas), SQL databases, and Hadoop are well suited to handling large datasets efficiently.

How can I optimize processing speed for large numbers?

Use optimized algorithms and parallel processing, and consider distributed computing solutions such as cloud platforms.

Why is data integrity important in large datasets?

Data integrity ensures accuracy and reliability, which is crucial for decision-making and analysis in large-scale projects.