Large Language Models for Regression Tasks Explained

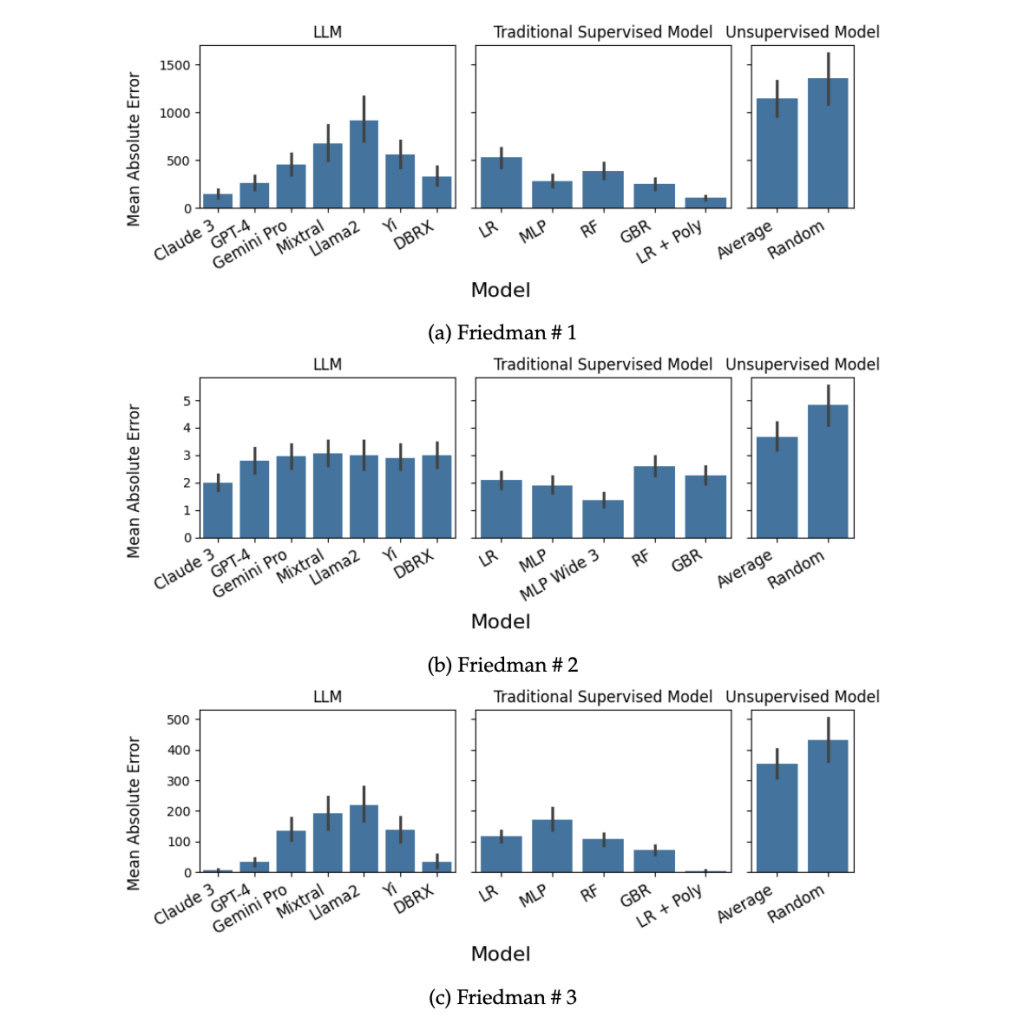

Large Language Models (LLMs) have reshaped natural language processing, and their use in regression tasks is attracting growing interest. Regression, the prediction of continuous numerical values, has traditionally relied on statistical models and classical machine learning algorithms. LLMs are now being adapted for these tasks as well, mainly where the inputs include free text. This blog explores how LLMs can be leveraged for regression, their advantages, challenges, and practical applications.

Understanding Regression Tasks in Machine Learning

Regression tasks involve predicting a continuous outcome variable based on input features. Common examples include predicting house prices, stock prices, or sales figures. Traditional models like Linear Regression, Decision Trees, and Neural Networks are widely used for these tasks. LLMs add another option: they can process textual data directly and extract patterns that structured features alone may miss.

How Large Language Models Adapt to Regression Tasks

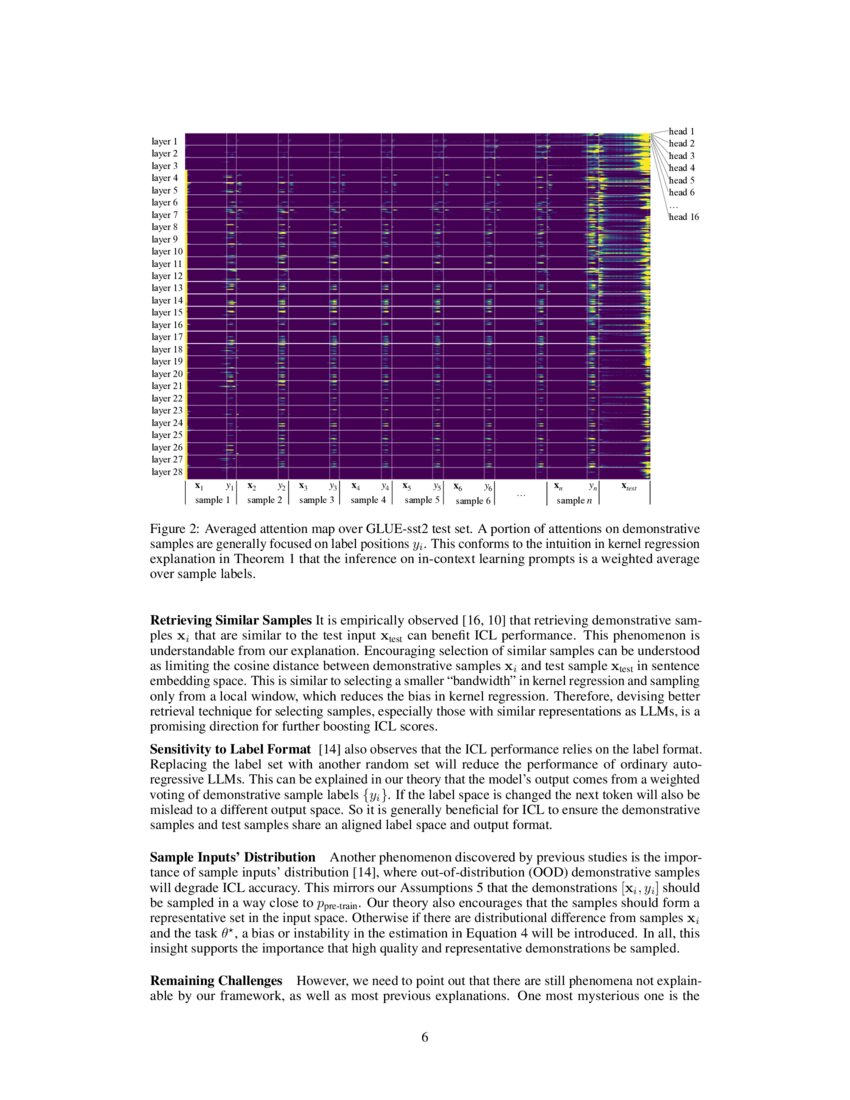

LLMs such as GPT and BERT are pre-trained on large text corpora: GPT-style models generate text, while BERT-style encoders are typically fine-tuned for tasks like classification. To adapt them for regression, additional layers or fine-tuning techniques are employed. For instance, a pre-trained LLM can be augmented with a regression head, a neural network layer that maps the model's internal representation of the input to a numerical value. This hybrid approach allows LLMs to process textual inputs and produce continuous predictions.
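To make the idea of a regression head concrete, here is a minimal sketch in plain Python. It assumes the pre-trained model has already turned the input into one vector per token; the vectors below are made-up stand-ins, and `mean_pool` and `RegressionHead` are illustrative names rather than part of any library. A real setup would use a deep learning framework and learn the weights during fine-tuning.

```python
import random


def mean_pool(token_vectors):
    """Average per-token vectors into a single fixed-size vector."""
    dim = len(token_vectors[0])
    count = len(token_vectors)
    return [sum(vec[i] for vec in token_vectors) / count for i in range(dim)]


class RegressionHead:
    """A single linear layer that maps a pooled vector to one real number."""

    def __init__(self, hidden_size, seed=0):
        rng = random.Random(seed)
        # Small random initial weights; fine-tuning would update these.
        self.weights = [rng.uniform(-0.1, 0.1) for _ in range(hidden_size)]
        self.bias = 0.0

    def __call__(self, pooled):
        return sum(w * x for w, x in zip(self.weights, pooled)) + self.bias


# Stand-in for the model's output: 3 tokens, hidden size 4 (made-up values).
token_vectors = [
    [0.2, -0.1, 0.4, 0.0],
    [0.0, 0.3, -0.2, 0.1],
    [0.1, 0.1, 0.1, 0.2],
]

pooled = mean_pool(token_vectors)
head = RegressionHead(hidden_size=4)
prediction = head(pooled)  # an unbounded real number, not a class label
```

Mean pooling is only one way to summarize the token vectors; using the vector of a special first token or of the last token are common alternatives.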

Steps to Implement LLMs for Regression

- Data Preparation: Convert input features into text format or use textual data directly.

- Model Selection: Choose a pre-trained LLM suitable for your task.

- Fine-Tuning: Train the model on your dataset with a regression head.

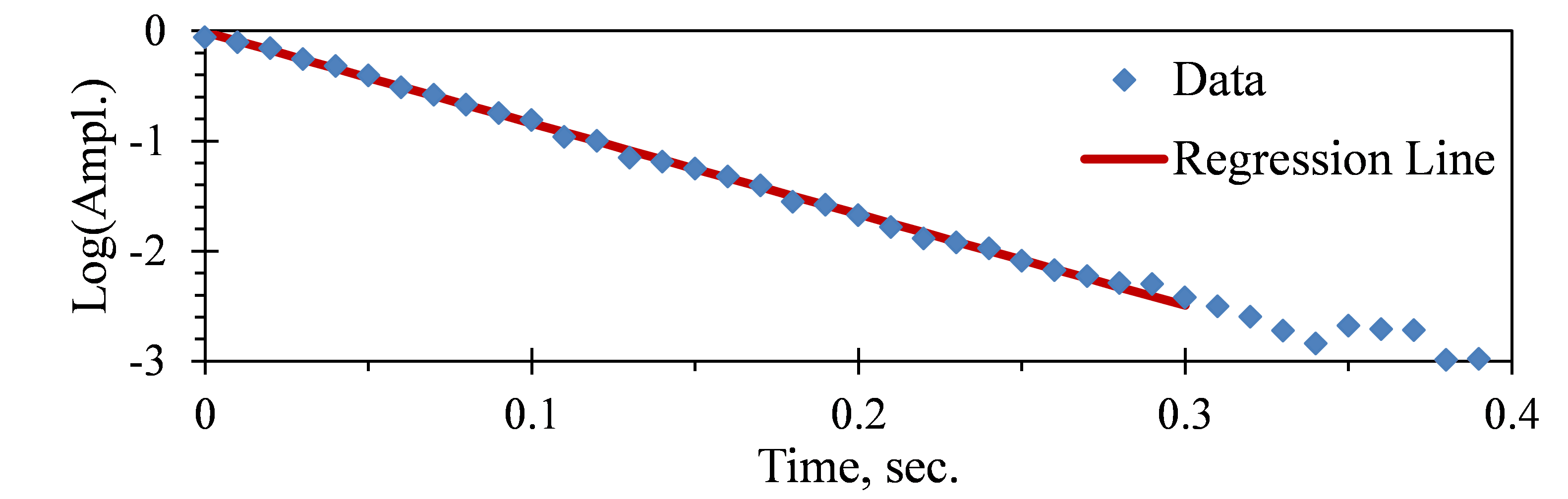

- Evaluation: Use metrics like Mean Squared Error (MSE) or R² to assess performance.
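The two metrics named in the evaluation step can be computed in a few lines. This is a small sketch with made-up target and prediction values; the function names are illustrative.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r_squared(y_true, y_pred):
    """1 minus the ratio of residual variation to total variation.

    Undefined when all targets are identical (total variation is zero).
    """
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Made-up values for illustration only.
y_true = [3.0, 5.0, 7.0, 9.0]
y_pred = [2.5, 5.5, 7.0, 8.0]

mse = mean_squared_error(y_true, y_pred)  # 0.375
r2 = r_squared(y_true, y_pred)            # 0.925
```

MSE is expressed in squared units of the target, so lower is better; R² is unitless, with 1.0 meaning a perfect fit and 0.0 meaning no better than always predicting the mean.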

📌 Note: Ensure each training example pairs its input (text, or features converted to text) with a numerical target value.
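One possible way to handle the data preparation step is to serialize each record into a short text string and keep the target as a float. The sketch below assumes a hypothetical housing dataset; the field names, values, and the `key: value` format are all illustrative choices, not a required convention.

```python
def row_to_text(row, target_key):
    """Serialize every field except the target as 'key: value' pairs."""
    parts = [f"{key}: {value}" for key, value in row.items() if key != target_key]
    return "; ".join(parts)


def build_examples(rows, target_key):
    """Return (input_text, numeric_target) pairs ready for fine-tuning."""
    return [(row_to_text(row, target_key), float(row[target_key])) for row in rows]


# Hypothetical records; values are made up for illustration.
rows = [
    {"bedrooms": 3, "area_sqft": 1400,
     "description": "Renovated kitchen, quiet street", "price": 310000},
    {"bedrooms": 2, "area_sqft": 900,
     "description": "Needs work, close to station", "price": 195000},
]

examples = build_examples(rows, target_key="price")
# examples[0] ==
# ("bedrooms: 3; area_sqft: 1400; description: Renovated kitchen, quiet street", 310000.0)
```

In practice, targets with a wide range (such as prices) are often scaled or log-transformed before training, then converted back at prediction time.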

Advantages of Using LLMs for Regression

- Contextual Understanding: LLMs can capture nuances in textual data, improving predictions.

- Versatility: They can handle mixed inputs, such as free text alongside numbers serialized as text, in a single model.

- Scalability: Starting from a pre-trained model reduces training time and resource requirements compared with training from scratch.

For businesses, LLMs can offer a competitive edge by improving forecasts when useful signal sits in text, which supports data-driven decisions.

Challenges and Limitations of LLMs in Regression

While LLMs show promise, they face challenges such as:

- Overfitting: Large models fine-tuned on small datasets can memorize the training examples and perform poorly on unseen data.

- Computational Cost: Training and deploying LLMs require significant resources.

- Interpretability: Understanding how LLMs arrive at predictions remains a challenge.

Addressing these limitations is crucial for widespread adoption.
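One common way to limit overfitting during fine-tuning is early stopping: hold out a validation set, track its loss after each epoch, and stop once it no longer improves. The post does not prescribe this technique; the sketch below is an illustration with made-up loss values, and `early_stopping_epoch` and `patience` are illustrative names.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return (best_epoch, best_loss), stopping after `patience`
    consecutive epochs without a new best validation loss."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stalled; stop training here
    return best_epoch, best_loss


# Made-up validation losses, one per epoch.
val_losses = [0.90, 0.62, 0.55, 0.58, 0.61, 0.50]

best_epoch, best_loss = early_stopping_epoch(val_losses, patience=2)
# Training stops after epoch 4, so the later 0.50 is never reached;
# the checkpoint from epoch 2 (loss 0.55) would be kept.
```

The example also shows the trade-off: a small `patience` saves compute but can stop before a later improvement, so the value is usually tuned per task.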

Practical Applications of LLMs in Regression

LLMs are being applied across industries for regression tasks:

| Industry | Application |
|---|---|
| Real Estate | Predicting property prices based on descriptions and location data. |
| Finance | Forecasting stock prices using news articles and market trends. |
| Healthcare | Estimating patient recovery times from medical records and notes. |

These applications highlight the versatility of LLMs in real-world scenarios.

Checklist for Implementing LLMs in Regression Tasks

- Prepare textual and numerical data for training.

- Select a pre-trained LLM and add a regression head.

- Fine-tune the model on your specific dataset.

- Evaluate performance using regression metrics.

- Optimize for challenges like overfitting and interpretability.

Large Language Models are extending regression to problems where much of the signal lives in text, by processing that text and extracting meaningful patterns. While challenges remain, their advantages in contextual understanding and versatility make them a valuable tool for businesses and researchers alike. By following the steps and checklist outlined in this blog, you can implement LLMs for your regression tasks and unlock new possibilities in predictive modeling.

Can LLMs outperform traditional regression models?

LLMs can outperform traditional models when dealing with textual data or multimodal inputs, but their performance depends on the specific task and dataset.

What are the computational requirements for training LLMs?

Training LLMs from scratch requires significant computational resources, including GPUs and large memory capacities. Fine-tuning a pre-trained model instead of training from scratch reduces this burden considerably.

How can I improve the interpretability of LLM-based regression models?

Techniques like SHAP (SHapley Additive exPlanations) or LIME (Local Interpretable Model-agnostic Explanations) can help understand LLM predictions.
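The sketch below is neither SHAP nor LIME; it is a simpler leave-one-word-out (occlusion) check that shares the same perturbation idea: remove part of the input and see how the prediction moves. `toy_predict` is a hypothetical stand-in for a fine-tuned model, with hard-coded rules chosen only so the output is easy to follow.

```python
def occlusion_attributions(predict, text):
    """Score each word by how much the prediction drops when it is removed."""
    words = text.split()
    baseline = predict(text)
    scores = []
    for i, word in enumerate(words):
        reduced = " ".join(words[:i] + words[i + 1:])
        scores.append((word, baseline - predict(reduced)))
    return scores


def toy_predict(text):
    """Hypothetical stand-in for a fine-tuned regression model."""
    tokens = text.lower().split()
    price = 200.0
    if "renovated" in tokens:
        price += 50.0
    if "noisy" in tokens:
        price -= 30.0
    return price


attributions = occlusion_attributions(toy_predict, "Renovated flat on noisy street")
# [('Renovated', 50.0), ('flat', 0.0), ('on', 0.0), ('noisy', -30.0), ('street', 0.0)]
```

A positive score means the word pushed the prediction up, a negative score means it pushed it down. Unlike SHAP, this does not account for interactions between words, so it is best treated as a quick sanity check.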